In today’s fast-paced digital world, natural language processing (NLP) plays an important role in applications ranging from chatbots and virtual assistants to sentiment analysis and automated translation. The ability to understand and interpret human language is critical to these tasks, and developers are constantly looking for ways to improve NLP models.

One of the breakthroughs in this area is DistilBERT. Created by Hugging Face, DistilBERT is a smaller, lighter version of the original BERT model that promises to change the way NLP tasks are performed. It delivers substantial gains in speed and efficiency while retaining most of BERT's accuracy. Like BERT, it uses a transformer-based architecture to process text and extract key information.
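The core operation of that transformer architecture is attention, where each token's representation is updated as a weighted mix of the others, with weights given by softmax(QKᵀ/√d_k). The following is a minimal pure-Python sketch of single-head scaled dot-product attention on tiny hand-made vectors. It is a didactic toy, not DistilBERT's code: the real model uses multi-head attention over learned 768-dimensional projections and runs on optimized tensor libraries.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def transpose(m: Matrix) -> Matrix:
    """Swap rows and columns."""
    return [list(col) for col in zip(*m)]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b."""
    return [
        [sum(a_ik * b[k][j] for k, a_ik in enumerate(row)) for j in range(len(b[0]))]
        for row in a
    ]


def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Single-head attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(k[0])
    scores = matmul(q, transpose(k))  # similarity of each query to each key
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v)  # each output row is a weighted mix of value rows


if __name__ == "__main__":
    # Three toy token vectors with d_k = 2, used as queries, keys and values.
    x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    for row in scaled_dot_product_attention(x, x, x):
        print([round(val, 3) for val in row])
```

Because every row of attention weights sums to 1, each output vector is a convex combination of the value vectors; dividing by √d_k keeps the scores from growing with the vector dimension and saturating the softmax.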

DistilBERT’s most important advantage is its improved computational efficiency. Its smaller size and faster inference let developers run NLP tasks faster and at lower cost. This matters most when working with very large datasets or in real-world applications where speed is essential. Whether you are analyzing customer reviews or running sentiment analysis on social media data, DistilBERT can significantly reduce processing time and improve overall throughput.

In addition, DistilBERT makes more efficient use of computing resources while largely preserving accuracy and performance. It retains the core language-understanding capabilities and semantic knowledge of the original BERT model. In short, developers get a highly effective tool for a wide range of NLP tasks, which makes DistilBERT a strong choice for developers and researchers looking to simplify their workflows and achieve reliable results.
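DistilBERT keeps much of BERT's ability because it was trained with knowledge distillation: the smaller "student" network learns to reproduce the output distribution of the larger BERT "teacher". According to the DistilBERT paper, the training loss combines this distillation term with the usual masked-language-modeling loss and a cosine-embedding loss on hidden states. The sketch below shows only the soft-target distillation term in plain Python; the function names and logits are hypothetical, and a real training run would compute this with a deep learning framework over the full vocabulary.

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Softmax of logits / T; a larger T yields a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def soft_target_loss(
    student_logits: List[float],
    teacher_logits: List[float],
    temperature: float = 2.0,
) -> float:
    """Cross-entropy of the student's softened distribution against the
    teacher's softened distribution: -sum_i t_i * log(s_i)."""
    t = softmax_with_temperature(teacher_logits, temperature)
    s = softmax_with_temperature(student_logits, temperature)
    return -sum(ti * math.log(si) for ti, si in zip(t, s))


if __name__ == "__main__":
    teacher = [4.0, 1.0, 0.5]        # hypothetical teacher logits for one masked token
    close_student = [3.5, 1.2, 0.4]  # hypothetical student that mimics the teacher
    far_student = [0.5, 1.0, 4.0]    # hypothetical student that disagrees
    print(soft_target_loss(close_student, teacher))
    print(soft_target_loss(far_student, teacher))
```

The student that mimics the teacher gets the lower loss. The temperature matters because a softened teacher distribution also exposes how the teacher ranks the wrong answers, which carries more information for the student than a single hard label.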

DistilBERT makes the power of modern NLP models available to a wider audience, enabling more efficient and accurate text processing and analysis across a wide range of domains.

Improve Natural Language Processing with DistilBERT

DistilBERT is a powerful tool that has had a major impact on natural language processing (NLP), opening up new possibilities for many kinds of NLP tasks thanks to its strong understanding of human language. In this section, we discuss several ways in which DistilBERT improves NLP.

1. Language understanding: DistilBERT learns from large text corpora to capture the complexity of natural language. It can identify and extract relevant information from unstructured text, which makes it a valuable asset for tasks such as sentiment analysis, text classification, and named entity recognition.

2. Text generation: DistilBERT is an encoder-only model, so it does not generate free-form text on its own. It can, however, serve as a component in systems for tasks such as text summarization and dialogue, for example by selecting key sentences for extractive summaries or by encoding inputs and ranking candidate responses, helping those systems produce coherent and contextually relevant output.

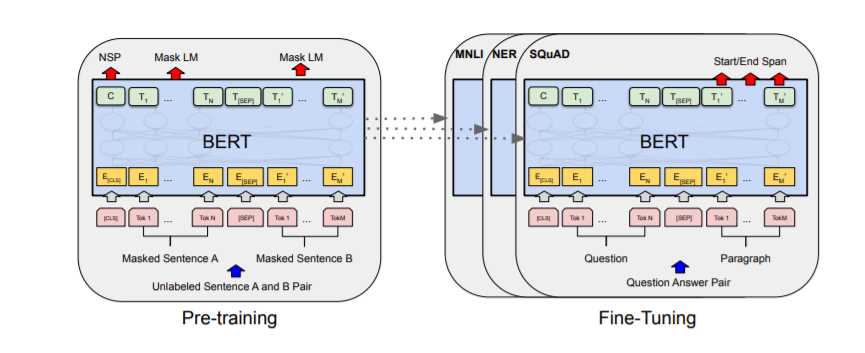

3. Transfer learning: One of DistilBERT's most important advantages is that it supports transfer learning. By first pretraining on large amounts of general text, DistilBERT learns general-purpose language representations that can be adapted to specific NLP tasks with a small amount of additional training (fine-tuning). This reduces the amount of labeled data needed for training and speeds up the development of NLP models.

4. Multilingual support: The standard DistilBERT checkpoints are English-only, but a multilingual version (distilbert-base-multilingual-cased) is trained on text in many languages, allowing it to understand text across languages. This makes it a valuable tool for tasks such as cross-lingual text classification, so language becomes much less of an obstacle in NLP.

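5. Efficiency: Compared with its predecessor, BERT, DistilBERT is a compressed model that delivers similar performance with far less computing power. According to its authors, it is 40% smaller and 60% faster than BERT-base while retaining 97% of BERT's language-understanding performance. This makes it more practical to run NLP models on devices with limited computing power.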
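The size reduction follows directly from the architecture. The arithmetic below is a sketch that counts the weights and biases of a BERT-style encoder without a task head, assuming the published bert-base-uncased hyperparameters (30,522-token vocabulary, 768 hidden units, 3,072-unit feed-forward layers, 512 positions). Per the DistilBERT paper, DistilBERT halves the number of layers and removes the token-type embeddings and the pooler. The helper function name is hypothetical.

```python
def transformer_encoder_params(
    num_layers: int,
    hidden: int = 768,
    intermediate: int = 3072,
    vocab: int = 30522,
    max_positions: int = 512,
    token_types: int = 2,
    pooler: bool = True,
) -> int:
    """Count weights + biases of a BERT-style encoder (no task-specific head)."""
    # Embeddings: word, position and (optional) token-type tables, plus one LayerNorm.
    embeddings = (vocab + max_positions + token_types) * hidden + 2 * hidden
    # Self-attention: Q, K, V and output projections, each hidden x hidden plus bias.
    attention = 4 * (hidden * hidden + hidden)
    # Feed-forward: hidden -> intermediate -> hidden, with biases.
    ffn = (hidden * intermediate + intermediate) + (intermediate * hidden + hidden)
    # Two LayerNorms per layer, each with a scale and a shift vector.
    layer_norms = 2 * 2 * hidden
    per_layer = attention + ffn + layer_norms
    pooler_params = (hidden * hidden + hidden) if pooler else 0
    return embeddings + num_layers * per_layer + pooler_params


bert_base = transformer_encoder_params(num_layers=12)
distilbert = transformer_encoder_params(num_layers=6, token_types=0, pooler=False)

print(f"BERT-base:  {bert_base:,}")
print(f"DistilBERT: {distilbert:,}")
print(f"Reduction:  {1 - distilbert / bert_base:.1%}")
```

Under these assumptions the counts come out to 109,482,240 and 66,362,880 parameters, in line with the roughly 110M and 66M figures usually quoted, a reduction of about 39%. Because the roughly 23.8M-parameter embedding table is kept, the size saving is less than half; the speedup comes mostly from running 6 transformer layers instead of 12.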

In this way, DistilBERT improves natural language processing by delivering strong language understanding in a compact, efficient model. Its support for transfer learning, multilingual use, and efficient inference makes it a versatile tool for a wide range of NLP tasks, and it puts capable NLP within reach of far more projects.
