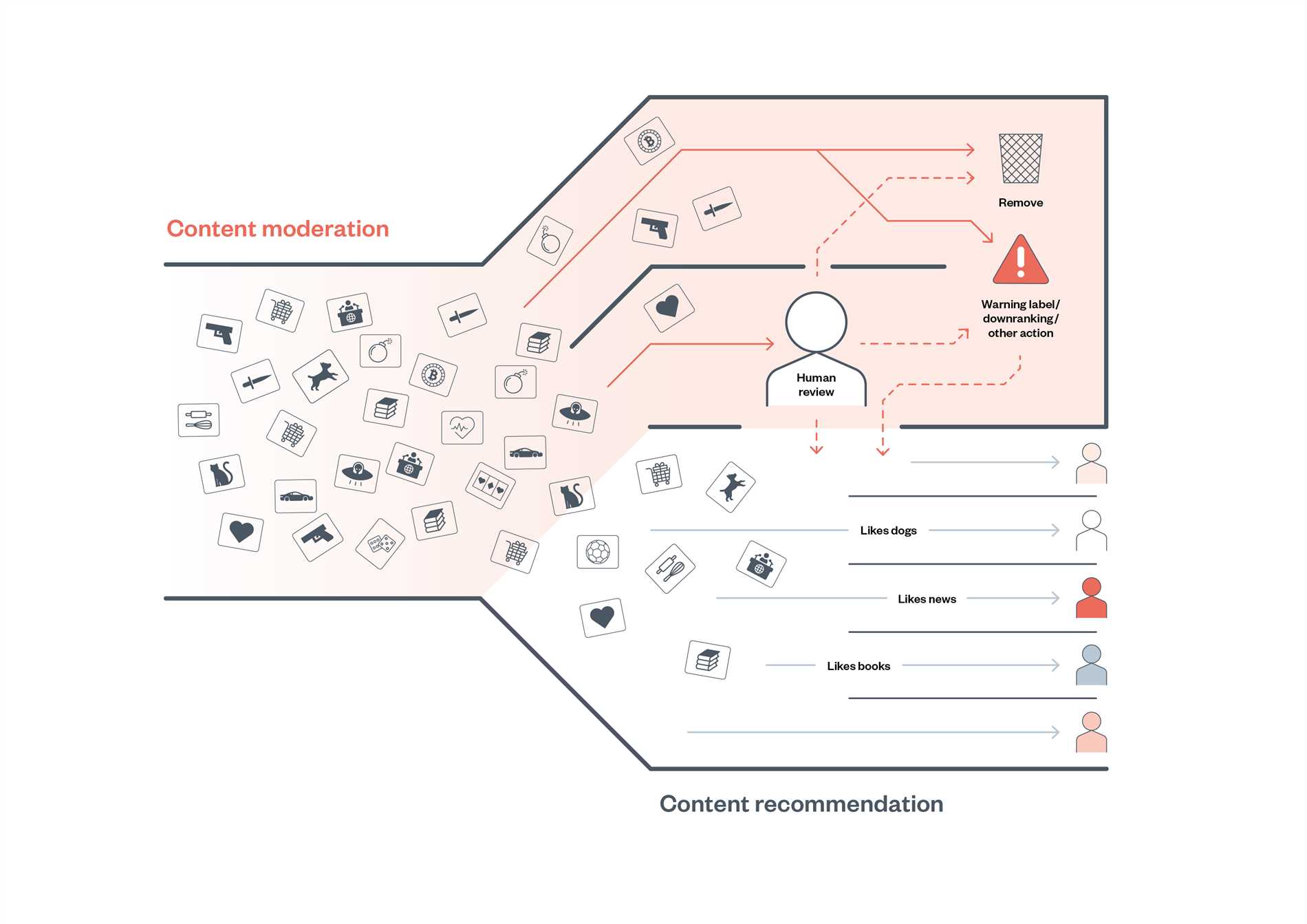

With the rapid growth of online content, the need for effective image moderation is greater than ever. From social media platforms to e-commerce sites, maintaining a safe and user-friendly environment is a priority. This is where an innovative solution for automated image moderation comes in.

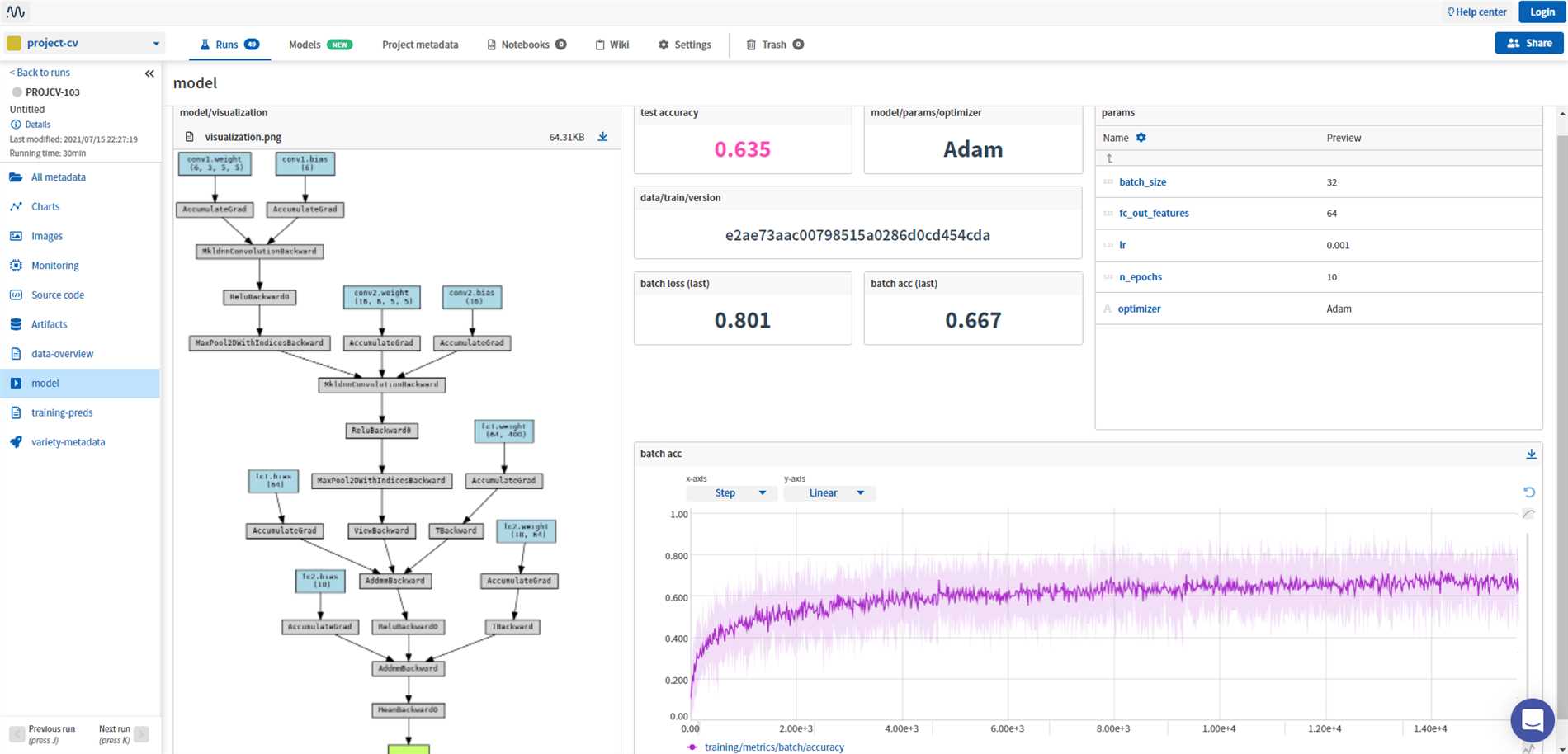

Arthurai uses advanced artificial intelligence and machine learning techniques to analyze images in real time and identify potentially harmful or inappropriate content. This approach not only saves time and resources, but is also designed to deliver a high degree of accuracy and reliability in content moderation.

Thanks to a deep learning model, Arthurai can detect a wide range of visual content, including nudity, violence, hate speech, and spam. The model keeps learning and adapts to new patterns and trends over time, which makes it efficient and effective at moderating large volumes of images.

Arthurai: an advanced tool for image moderation

In a digital age where billions of images are shared online every day, the need for effective image moderation is greater than ever. The rise of social media platforms, online marketplaces, and content-sharing sites makes it increasingly difficult to manually evaluate and moderate every uploaded image.

That is where Arthurai comes in. Arthurai is considered the most advanced solution for automated image moderation. With the help of advanced machine learning techniques and artificial intelligence, it analyzes and classifies images in real time so that inappropriate or harmful content is filtered out before it reaches the viewer.

Arthurai uses deep learning models to understand the context and meaning of image content, as opposed to classical image moderation methods that rely on manual review or simple filtering. This allows it to accurately identify and block images containing explicit, violent, or otherwise unwanted material.

Arthurai’s image moderation is based on a large dataset of labeled images that have been carefully curated and annotated to train the machine learning model. When a new image is uploaded, Arthurai evaluates it against what it has learned from this dataset and uses those insights to determine whether the image should be flagged.

To allow companies and developers to integrate Arthurai into their own platforms, the solution is available as an API. This means that Arthurai’s image moderation capabilities can be plugged into existing systems, such as social network front ends, e-commerce sites, and content management systems.

With the help of Arthurai’s API, developers can get real-time feedback on image safety and make informed decisions about accepting an image, rejecting it, or flagging it for further review. This not only helps companies maintain a safe and respectful online environment, but also saves the time and resources otherwise spent on manual evaluation of images.
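The accept, reject, or review decision described above can be sketched in a few lines of Python. This is only an illustration: the article does not document the API's response format, so the per-category score dictionary, the category names, and the threshold values below are all assumptions rather than part of the actual product.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ACCEPT = "accept"
    REJECT = "reject"
    REVIEW = "review"


@dataclass(frozen=True)
class Thresholds:
    # Hypothetical cut-offs; a real deployment would tune these per platform.
    reject_at: float = 0.90
    review_at: float = 0.50


def decide(scores: dict[str, float], thresholds: Thresholds = Thresholds()) -> Decision:
    """Map per-category risk scores (assumed to lie in 0..1) to a decision.

    The highest score across all categories drives the outcome, so a single
    high-risk category is enough to reject or escalate an image.
    """
    worst = max(scores.values(), default=0.0)
    if worst >= thresholds.reject_at:
        return Decision.REJECT
    if worst >= thresholds.review_at:
        return Decision.REVIEW
    return Decision.ACCEPT


# Example with made-up scores for a single uploaded image.
example_scores = {"nudity": 0.03, "violence": 0.62, "spam": 0.10}
print(decide(example_scores).value)
```

Using two thresholds rather than one keeps a middle band of uncertain images for human review, which matches the three outcomes the article mentions.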

With its advanced technology and large-scale image moderation capabilities, Arthurai is shaping the future of automated content moderation. As online communities continue to grow and evolve, the need for efficient and reliable moderation tools will only increase. With Arthurai, companies can be confident that their platform is secure and their users are safe.
